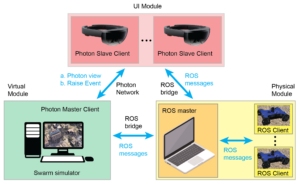

System architecture for virtual-physical hybrid swarm simulation

This paper introduces a hybrid robotic swarm system architecture that combines virtual and physical components and enables human–swarm interaction through mixed reality (MR) devices. The system comprises three main modules: (1) the virtual module, which simulates robotic agents; (2) the physical module, which consists of real robotic agents; and (3) the user interface (UI) module. The UI module connects to the virtual module through Photon Network and to the physical module through the Robot Operating System (ROS) bridge, and the virtual and physical modules also communicate with each other via the ROS bridge. Integrating these three modules allows the virtual and physical agents to form a single hybrid swarm. The MR-based human–swarm interface enables one or more human users to create and assign tasks, monitor swarm status and activities in real time, and control or interact with specific robotic agents. To validate the system-level integration and the embedded swarm functions, two experimental demonstrations were conducted: (a) two users, acting as planner and observer, assigned five tasks that the swarm then allocated autonomously and executed; and (b) a single user interacted with a hybrid swarm of two physical agents and 170 virtual agents by creating and assigning a task list and then controlling one of the physical robots to complete a target identification mission.
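As an illustration only, the module topology described above can be written down as a small lookup table. The sketch below is not the system's implementation: the names `Module`, `Transport`, `LINKS`, and `transport_between` are hypothetical, and the code models only which transport joins which pair of modules, without touching the Photon or ROS bridge APIs.

```python
from enum import Enum


class Module(Enum):
    """The three modules named in the architecture (names are illustrative)."""
    VIRTUAL = "virtual"
    PHYSICAL = "physical"
    UI = "ui"


class Transport(Enum):
    """The two communication channels mentioned in the text."""
    PHOTON = "Photon Network"
    ROS_BRIDGE = "ROS bridge"


# Each link is undirected, so an unordered pair (frozenset) is used as the key.
LINKS = {
    frozenset({Module.UI, Module.VIRTUAL}): Transport.PHOTON,
    frozenset({Module.UI, Module.PHYSICAL}): Transport.ROS_BRIDGE,
    frozenset({Module.VIRTUAL, Module.PHYSICAL}): Transport.ROS_BRIDGE,
}


def transport_between(a: Module, b: Module) -> Transport:
    """Return the channel that connects two distinct modules."""
    if a == b:
        raise ValueError("a module does not need a transport to reach itself")
    return LINKS[frozenset({a, b})]


if __name__ == "__main__":
    for pair, transport in LINKS.items():
        first, second = sorted(m.value for m in pair)
        print(f"{first} <-> {second}: {transport.value}")
```

Keying the table on unordered pairs reflects that each link is described as bidirectional, so `transport_between(Module.UI, Module.VIRTUAL)` and `transport_between(Module.VIRTUAL, Module.UI)` resolve to the same channel.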